Posts tagged distributed

Fine Performance Metrics and Spans

- 23 August 2023

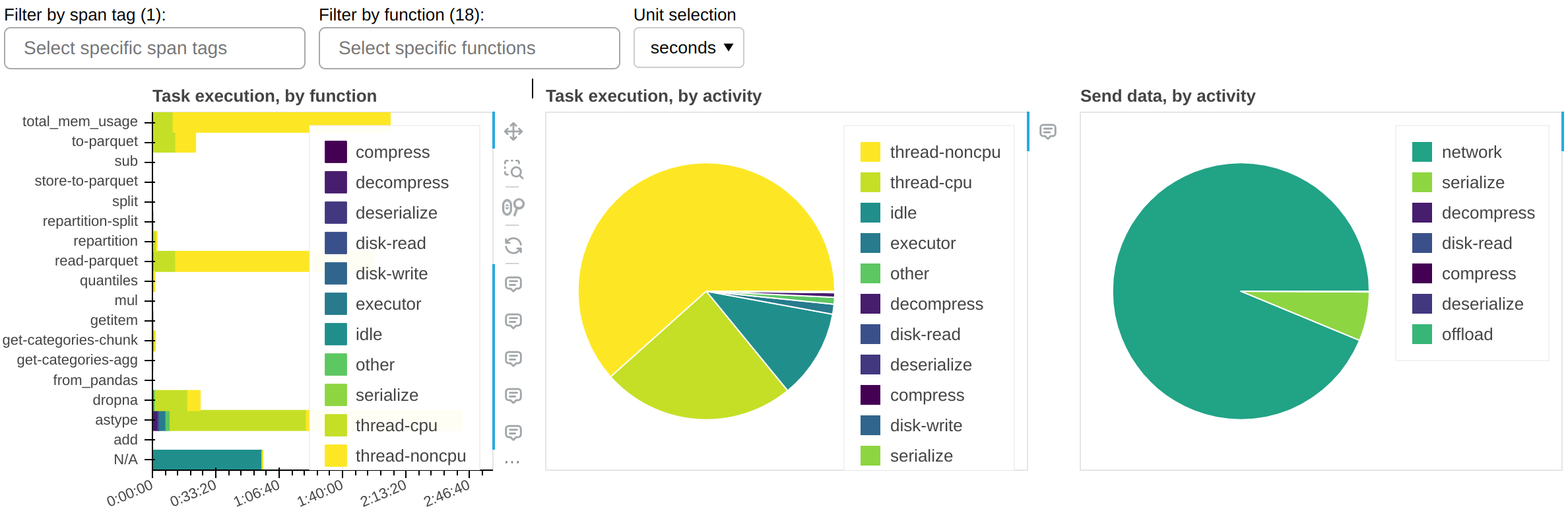

While it’s trivial to measure the end-to-end runtime of a Dask workload, the next logical step, breaking down this time to understand whether it could be faster, has historically been a much more arduous task that required a lot of intuition and legwork, for novice and expert users alike. We wanted to change that.

Observability for Distributed Computing with Dask

- 16 May 2023

Debugging is hard. Distributed debugging is hell.

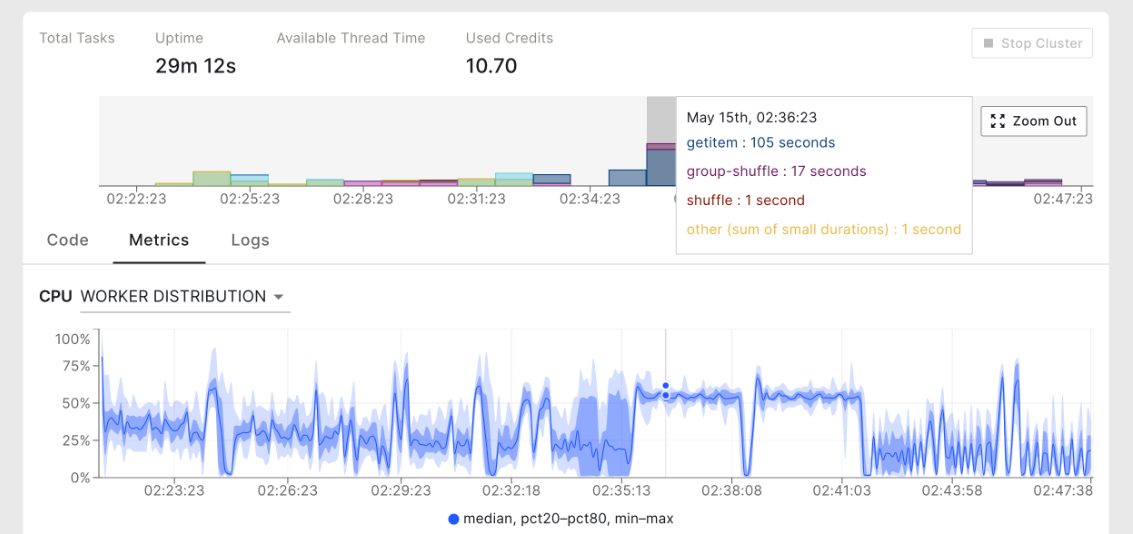

When dealing with unexpected issues in a distributed system, you need to understand what happened and why, how interactions between individual pieces contributed to the problems, and how to avoid them in the future. In other words, you need observability. This article explains what observability is, how Dask implements it, what pain points remain, and how Coiled helps you overcome them.

Performance testing at Coiled

- 05 May 2023

At Coiled we develop Dask and automatically deploy it to large clusters of cloud workers (sometimes 1000+ EC2 instances at once!). In order to avoid surprises when we publish a new release, Dask needs to be covered by a comprehensive battery of tests — both for functionality and performance.

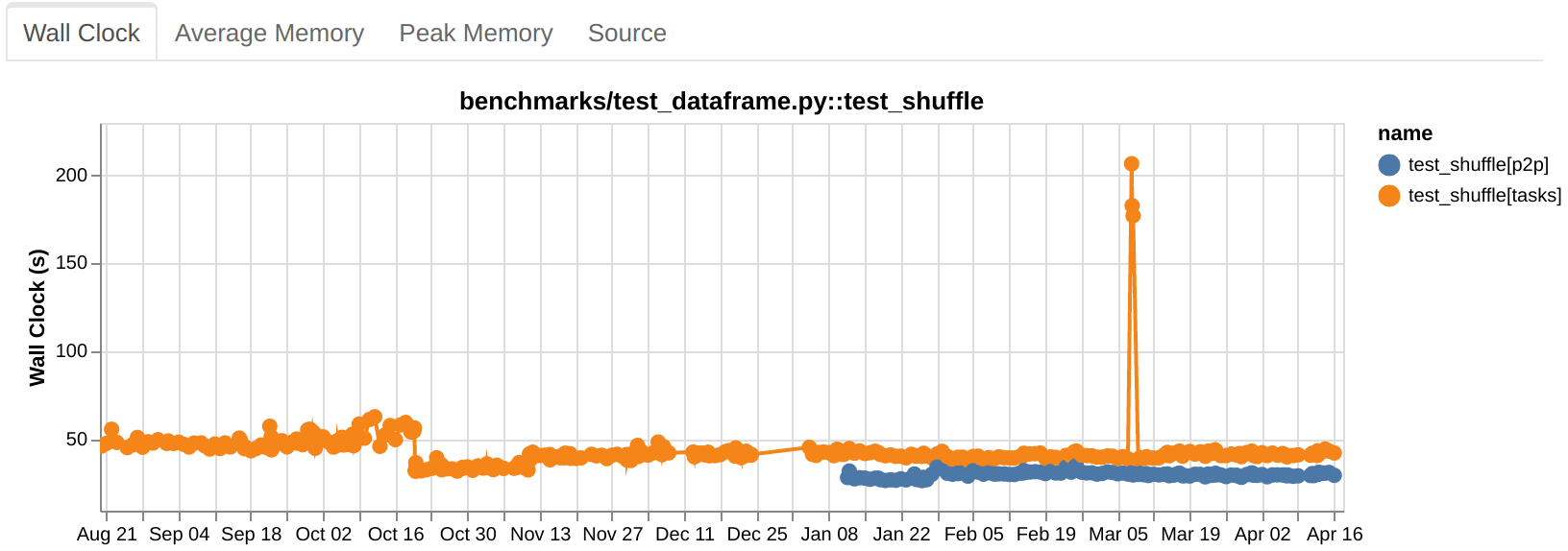

Shuffling large data at constant memory in Dask

- 15 March 2023

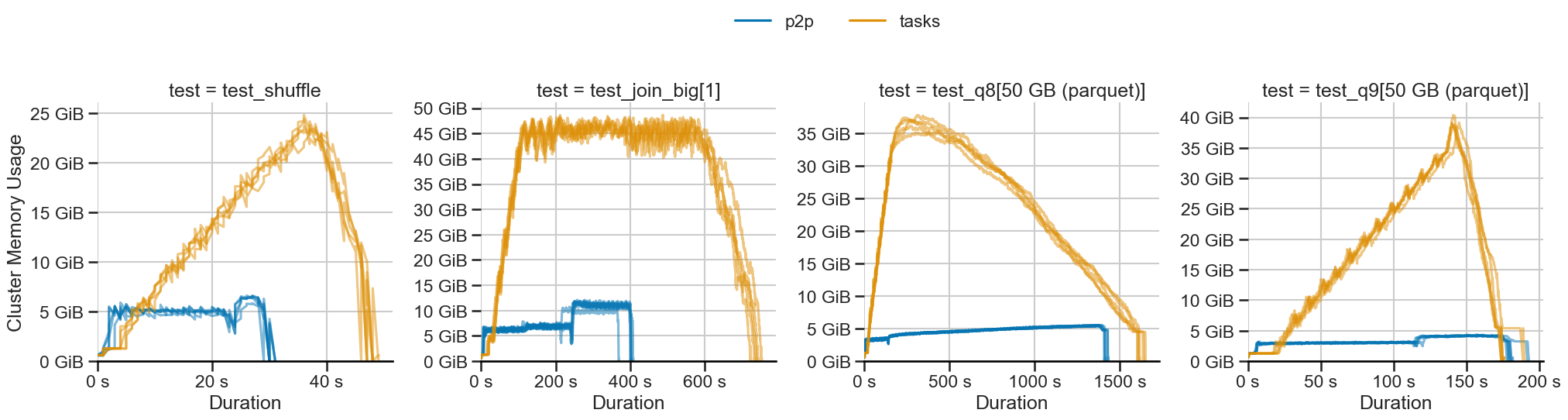

With release 2023.2.1, dask.dataframe introduces a new shuffling method called P2P, which makes sorts, merges, and joins faster and lets them run in constant memory.

Benchmarks show impressive improvements: